TLDR:

- We are calling Scala Native from Java using FFM, with jextract and sn-bindgen generating bindings

- GitHub repo for the example

- Batteries-included SBT template

- sbt-jextract that powers the template

In the previous blog post, we achieved the questionable success of running a JVM inside our Scala Native process and interacting with it using JNI. Justifying that complexity is pretty hard, at least without something that generates the tons of low-level code required to interface with the JVM to do even simple things.

But what if we reverse the direction of interoperability? Let's see how we can call Scala Native code from modern Java.

Your options for Java interop with native libraries

At first, there was JNI (yes, the same thing as in the previous post!), which allowed you to mark some methods as native; all you had to do was provide an implementation of each native method in a shared library. This technically allowed you to create an interface to any C library, as long as you carefully wrapped all the necessary functions in specially named JNI counterparts. But that's not all – Java cannot load dynamic libraries from the classpath, so even if you shipped all the various dynamic libraries (that implement your native interface) with your Java library, you'd still need to use something like jniloader that would unpack the libraries somewhere on disk and load them.

The situation annoyed some people, and JNA was created – it shipped a special universal native library and unpacking mechanism, alongside some Java annotations that allowed you to define a C-like interface purely in Java code, making those methods much easier to work with. JNA is a marvel of engineering, and works quite well, but it still has to ship a native library, and if you want to build your app into a GraalVM Native Image, you'll have to generate and adjust some configs which are not pleasant to work with.

Since Java 22 we finally have a better native solution – the Foreign Function and Memory (FFM) API. It is a solid API that makes it much easier to create interop points with C code, manage off-heap memory, deal with memory layouts, pass Java functions as C function pointers, etc. Its verbosity (it's still Java, after all) is forgivable given all the pleasant tools it provides for native interop.

I've been waiting a long time for FFM to land and become stable (the API changed oh so many times), and since Java 22 it finally is – so that's what we will use, on Java 23.
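One FFM feature from the list above, passing Java functions as C function pointers, is easiest to appreciate from the C side. The sketch below uses a hypothetical apply_twice function (it is not part of this post's interface) whose op parameter is a plain C function pointer. A Java caller would obtain such a pointer with Linker.upcallStub; here an ordinary C function, add_floats, fills that role instead.

```c
/* A C function pointer type: any function taking two floats and
   returning a float can be passed where a binary_op is expected. */
typedef float (*binary_op)(float left, float right);

/* Hypothetical API that accepts a callback. With FFM, a Java caller
   would pass a function pointer produced by Linker.upcallStub here. */
float apply_twice(binary_op op, float start, float step) {
    /* Call the callback twice, feeding the first result into the second call. */
    float once = op(start, step);
    return op(once, step);
}

/* A plain C function that matches binary_op and can serve as the callback. */
float add_floats(float left, float right) {
    return left + right;
}
```

From C's point of view it makes no difference whether op points at compiled C code or at an upcall stub that jumps back into the JVM; that uniformity is what makes callbacks workable across languages.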

How the interop sausage is made

As you should be aware, C is not a language anymore; it's a lot of things, but most importantly it's the lowest-level Interface Definition Language possible. C structs, functions, and memory layouts no longer serve just to help construct working C programs; they are used (and abused) as the primitives through which our different languages and systems talk to each other when sharing the same memory space.

The fact that this IDL is embedded into the C language, which has billions of other features, dialects, flags, attributes, etc., makes it quite difficult to work with, so most tooling just delegates to Libclang from LLVM – a C interface to the bowels of Clang, the C/C++ compiler written in C++. Rust, Swift, Zig, Java, and Scala Native all rely on Libclang to understand and introspect the binary interface definitions embedded into C header files under the guise of normal C structures and functions.

The takeaway from this is that the "best" interface to choose for native interop is a C header file. This severely limits the expressiveness of the interface (I covered that in my project for bridging Scala Native and Swift), as C is not a very expressive language, but at least it's reasonably performant.

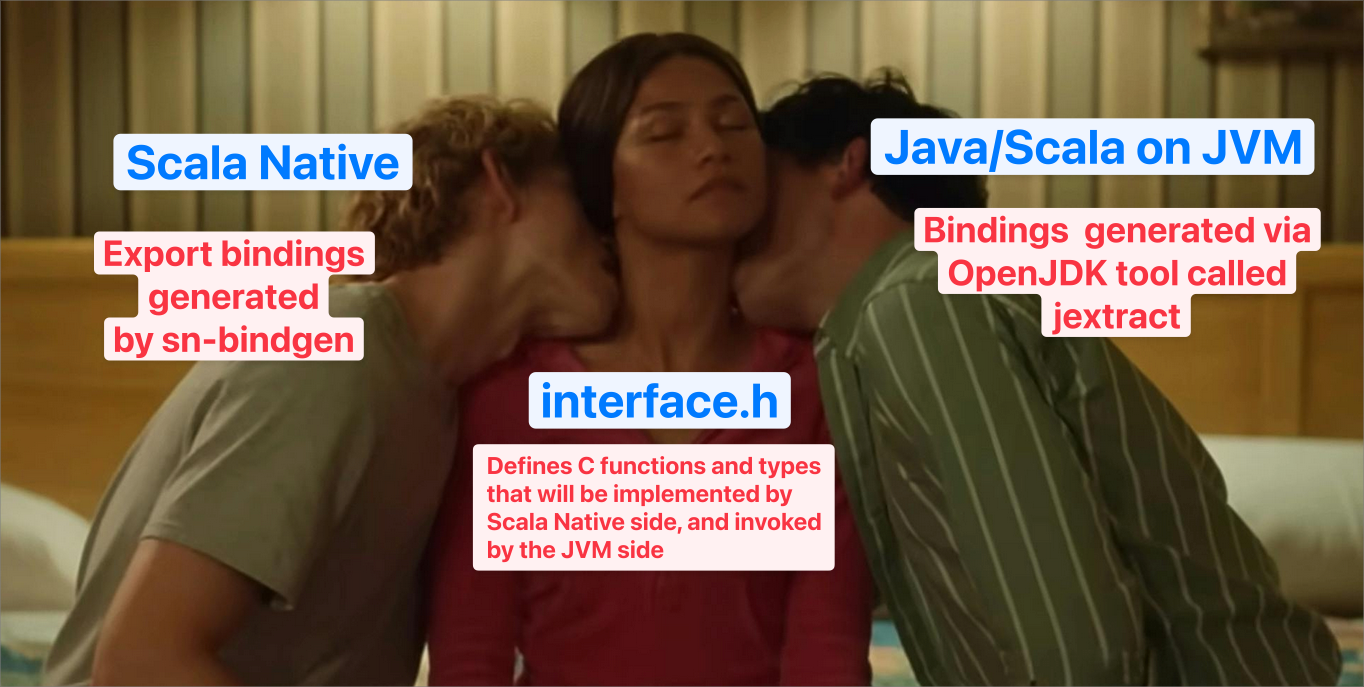

Here's the most fitting illustration I could come up with that describes our interop sandwich in reasonable detail.

Given its importance, let's start with our interface definition and describe what our program will be doing.

Shared C interface

Our program is very simple: it exposes a single function to run a floating-point operation, and each invocation can be labelled with a string. All of our C code will go into interface.h.

First, we'll allow only addition and multiplication:

```c
typedef enum { MULTIPLY = 1, ADD = 2 } myscalalib_operation;
```

The options will go into a struct:

```c
typedef struct {
  myscalalib_operation op; // type of operation
  char *label;
} myscalalib_config;
```

And finally, the function takes a pointer to the options struct and two float arguments:

```c
extern float myscalalib_run(myscalalib_config *config, float left, float right);
```

That's it! That's the interface.

This is our source of truth – now we need to transform that into code in Scala and Java that uses their respective interop features to describe this C interface, so that the Scala Native implementation can align with the Java code that invokes it.
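In practice, "align" means that all three sides agree on the size of every type and the byte offset of every struct field. A quick way to see the numbers the C compiler picks is offsetof and sizeof. The sketch below repeats the declarations from interface.h so it compiles on its own; the three helper functions are made-up names for illustration. The figures in the comment assume a typical 64-bit platform with 4-byte enums and 8-byte pointers. Whatever the platform, the Scala and Java bindings have to reproduce exactly this layout, including the padding between op and label.

```c
#include <stddef.h>

/* Same declarations as interface.h, repeated so this file stands alone. */
typedef enum { MULTIPLY = 1, ADD = 2 } myscalalib_operation;

typedef struct {
    myscalalib_operation op; /* type of operation */
    char *label;
} myscalalib_config;

/* Hypothetical helpers exposing the layout the compiler chose.
   On a typical 64-bit target: op at offset 0, then 4 bytes of padding,
   label at offset 8 (pointer-aligned), 16 bytes in total. */
size_t config_op_offset(void)    { return offsetof(myscalalib_config, op); }
size_t config_label_offset(void) { return offsetof(myscalalib_config, label); }
size_t config_size(void)         { return sizeof(myscalalib_config); }
```

If either binding generator got one of these numbers wrong, reads and writes of label would silently hit the wrong bytes, which is why both sides derive their layouts from the same header rather than from hand-written definitions.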

Scala bindings and implementation

As Scala will serve as the implementor in this interop sandwich, we will use sn-bindgen's Exports mode. All it will do is generate definitions for various structs and enums as normal, but functions will be generated as stubs, to be implemented by the user. In particular, for our interface it will generate a trait like this:

```scala
trait ExportedFunctions:
  import _root_.myscalalib.enumerations.*
  import _root_.myscalalib.predef.*
  import _root_.myscalalib.structs.*

  /**
   * [bindgen] header: interface.h
   */
  def myscalalib_run(config : Ptr[myscalalib_config], left : Float, right : Float): Float
```

and an @exported stub that references a user-defined implementation:

```scala
object functions extends ExportedFunctions:
  import _root_.myscalalib.enumerations.*
  import _root_.myscalalib.predef.*
  import _root_.myscalalib.structs.*

  /**
   * [bindgen] header: interface.h
   */
  @exported
  override def myscalalib_run(config : Ptr[myscalalib_config], left : Float, right : Float): Float =
    myscalalib.impl.Implementations.myscalalib_run(config, left, right)
```

This myscalalib.impl.Implementations object is what we must provide to make the project compile.

The command to generate the bindings looks like this:

```shell
sn-bindgen --header interface.h \
  --package myscalalib \
  --export --flavour scala-native05 \
  --scala --out scala-native-side/interface.scala
```

In our case the implementation is quite simple (we are using Scala CLI): we'll just use the Scribe logging library to trace every single operation request:

```scala
//> using platform scala-native
//> using scala 3.6.3
//> using nativeVersion 0.5.6
//> using nativeTarget dynamic
//> using dep com.outr::scribe::3.16.0

package myscalalib.impl

import scalanative.unsafe.*
import myscalalib.all.*

object Implementations extends myscalalib.ExportedFunctions:
  def myscalalib_run(
      config: Ptr[myscalalib_config],
      left: Float,
      right: Float
  ): Float =
    // Dereference the pointer to read the struct passed in from Java
    val cfg = !config
    // Copy the NUL-terminated C string into a Scala String
    val label = fromCString(cfg.label)

    if cfg.op == myscalalib_operation.ADD then
      scribe.info(s"[$label] $left + $right = ${left + right}")
      left + right
    else if cfg.op == myscalalib_operation.MULTIPLY then
      scribe.info(s"[$label] $left * $right = ${left * right}")
      left * right
    else ??? // any other integer value ends up here
```

Important things to call out:

- //> using nativeTarget dynamic instructs Scala CLI to build a dynamic library instead of an executable application

- Note how we cannot exhaustively match on the values of a C enum, because enums are a lie! They only exist as numbers at runtime, and on the Java side the interop bindings erase enums to plain numbers even at compile time (interface_h.ADD() is just an int), so you can pass any value

- This ??? is a big problem: Java will not understand exceptions thrown by Scala Native. Fixing this would require encoding error handling in one of the usual C ways (e.g. returning an error code and putting the result into a pointer, or setting a passed *err pointer to non-null); we won't do that for this simple example.
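The error-code convention from the last point could look roughly like the sketch below. myscalalib_run_checked and myscalalib_status are hypothetical names that do not exist in the example repo. The function returns a status code, writes the result through an out-pointer only on success, and reports an out-of-range op (which C happily accepts, since the enum is just an integer) as an error instead of throwing. It is written in C to show the contract; a Scala Native implementation exported through the same header would have to follow the same shape.

```c
#include <stddef.h>

typedef enum { MULTIPLY = 1, ADD = 2 } myscalalib_operation;

typedef struct {
    myscalalib_operation op;
    char *label;
} myscalalib_config;

/* Hypothetical status codes; 0 means success, as is common in C APIs. */
typedef enum {
    MYSCALALIB_OK = 0,
    MYSCALALIB_NULL_ARG = 1,
    MYSCALALIB_BAD_OP = 2
} myscalalib_status;

/* Hypothetical error-reporting variant of myscalalib_run: the result is
   written to *out, and the return value tells the caller if it is valid. */
myscalalib_status myscalalib_run_checked(const myscalalib_config *config,
                                         float left, float right, float *out) {
    if (config == NULL || out == NULL) {
        return MYSCALALIB_NULL_ARG;
    }
    switch (config->op) {
    case ADD:
        *out = left + right;
        return MYSCALALIB_OK;
    case MULTIPLY:
        *out = left * right;
        return MYSCALALIB_OK;
    default:
        /* Any other integer smuggled in through the "enum" lands here. */
        return MYSCALALIB_BAD_OP;
    }
}
```

On the Java side the caller would allocate a float-sized segment for out, check the returned int, and only then read the result, so no exception ever has to cross the language boundary.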

And that's it for the bindings and the Scala Native implementation. Let's build it into something Java can load.

Building the Scala Native library

All we need to do is run scala-cli package . -f -o ./scala-lib.dylib (.dylib on macOS, .so on Linux), and now we have a lovely dynamic library that Java can load. The nice thing about dynamic libraries is that Scala Native adds a sort of "constructor" to the library that initialises the Scala Native GC when the library is loaded, meaning we don't need to call ScalaNativeInit before using any Scala code.
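The "constructor" trick is a general feature of shared libraries: a function marked as such runs when the library is loaded, before any of its exported functions can be called. The sketch below shows the mechanism with the constructor attribute that GCC and Clang support. It only illustrates the idea and is not Scala Native's actual initialisation code; runtime_initialised, init_runtime and exported_entry_point are made-up names.

```c
/* Flag standing in for "the runtime (e.g. a GC) has been set up". */
int runtime_initialised = 0;

/* GCC/Clang extension: the loader runs this automatically when the shared
   library is loaded (for example via dlopen), or before main when the code
   is linked into an executable. */
__attribute__((constructor))
static void init_runtime(void) {
    runtime_initialised = 1;
}

/* Exported functions can rely on initialisation having already happened,
   so callers never need a separate init call. */
int exported_entry_point(void) {
    return runtime_initialised ? 42 : -1;
}
```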

jextract for Java bindings

jextract is a binding generator from the OpenJDK team that targets FFM exclusively, and it is also built on top of Libclang.

Once you download it and have it available on your PATH, it's quite easy to invoke:

```shell
jextract --output java-side/ \
  --target-package myscalalib_bindings \
  interface.h
```

This will generate some Java files in the java-side/ folder that contain all the interop definitions in the myscalalib_bindings package.

Java implementation

The first thing we need to do is load our dynamic library – for this example, let's just assume the path to it is passed as the first (and only) argument to the Java app. We are using Scala CLI to run the Java side as well, so here's what the loading logic looks like:

```java
//> using jvm 23
//> using javaOpt --enable-native-access=ALL-UNNAMED

import myscalalib_bindings.*;

import java.nio.file.Paths;
import java.lang.foreign.*;

public class Main {
    public static void main(String[] args) {
        // Load the dynamic library
        String dylibPath = null;
        if (args.length != 1) {
            System.err.println("First argument has to be path to dynamic library");
            System.exit(1);
        } else {
            dylibPath = args[0];
        }
        // System.load requires an absolute path
        System.load(Paths.get(dylibPath).toAbsolutePath().toString());

        /* Work with native methods here */
    }
}
```

- We are explicitly setting the JVM version to 23

- Adding --enable-native-access=ALL-UNNAMED avoids a warning at runtime

- System.load(Paths.get(dylibPath).toAbsolutePath().toString()) actually loads the dynamic library from disk. The location has to be an absolute path

Note that the SBT template I made for this project unpacks the dynamic library from the resources, which is much closer to real-life usage, even though it adds some build and runtime complexity.

And now, the culmination of our journey – let's use the methods we defined in Scala Native to write a program in Java!

```java
// All native memory allocated from this arena is freed when the try block exits
try (Arena arena = Arena.ofConfined()) {
    var config = myscalalib_config.allocate(arena);

    // allocateFrom copies a Java String into native memory as a NUL-terminated C string
    myscalalib_config.label(config, arena.allocateFrom("First test"));
    myscalalib_config.op(config, interface_h.ADD());
    interface_h.myscalalib_run(config, 25.0f, 150.0f);

    myscalalib_config.label(config, arena.allocateFrom("Second"));
    myscalalib_config.op(config, interface_h.MULTIPLY());
    interface_h.myscalalib_run(config, 50.0f, 10.0f);
}
```

One thing you can immediately notice is a bit of verbosity, but otherwise this is usable!
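For comparison, here is roughly what the Java snippet does, written directly in C against the declarations from interface.h. The myscalalib_run body is a hypothetical stand-in for the Scala Native implementation so that the sketch compiles on its own, and run_both is a made-up helper. Where the Java code needs a confined Arena to keep config and the label strings alive for the duration of the calls (and to free them when the try block exits), C gets the same effect here from a stack-allocated struct and string literals.

```c
#include <stdio.h>

/* Same declarations as interface.h, repeated so this file stands alone. */
typedef enum { MULTIPLY = 1, ADD = 2 } myscalalib_operation;

typedef struct {
    myscalalib_operation op;
    char *label;
} myscalalib_config;

/* Hypothetical stand-in for the Scala Native implementation. */
float myscalalib_run(myscalalib_config *config, float left, float right) {
    float result = 0.0f;
    if (config->op == ADD) {
        result = left + right;
    } else if (config->op == MULTIPLY) {
        result = left * right;
    }
    printf("[%s] %g\n", config->label, result);
    return result;
}

/* Mirrors the Java snippet: one config struct reused for two calls. */
void run_both(float results[2]) {
    myscalalib_config config;       /* like myscalalib_config.allocate(arena) */

    config.label = "First test";    /* like myscalalib_config.label(config, ...) */
    config.op = ADD;                /* like myscalalib_config.op(config, interface_h.ADD()) */
    results[0] = myscalalib_run(&config, 25.0f, 150.0f);

    config.label = "Second";
    config.op = MULTIPLY;
    results[1] = myscalalib_run(&config, 50.0f, 10.0f);
}
```

Line for line, the Java version is doing the same struct writes and calls; the extra verbosity comes from going through generated accessor methods and explicit memory management instead of direct field access.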

And when we run our Java app with Scala CLI:

```shell
cd scala-native-side && scala-cli package . -f -o ../scala-lib.dylib # 1
Wrote /Users/velvetbaldmime/projects/keynmol/scala-native-from-java-scala/scala-lib.dylib

cd java-side && scala-cli run . -- ../scala-lib.dylib # 2
Compiling project (Java)
Compiled project (Java)
2025.02.15 14:01:20:558 main INFO myscalalib.impl.main.myscalalib_run:16
[First test] 25.0 + 150.0 = 175.0
2025.02.15 14:01:20:558 main INFO myscalalib.impl.main.myscalalib_run:19
[Second] 50.0 * 10.0 = 500.0
```

1. Build the Scala Native library

2. Pass it to the Java app as the first argument

It works!

Try it for yourself in the example repo by running make run.

sbt-jextract

I planned to just release the small repository I made with Scala CLI and an SBT template, but while working on the template I realised that I couldn't in good conscience ask users to install jextract themselves.

So that's how sbt-jextract was born – a small SBT plugin that bootstraps jextract and handles things like caching and regeneration. The interface mirrors the SBT plugin I made for sn-bindgen, so through an egregious amount of copypasta I was able to knock it out in a couple of hours, and it works well.

Conclusion

Apart from the questionable lack of type safety in some areas (everything just gets lowered down to MemorySegment and/or primitive types?!), jextract seems solid – and I do like the general design of FFM; Scala Native could borrow some parts of it for stronger interop features.

Making these two very different languages and platforms kiss was surprisingly easy with the existing tooling, so I'm optimistic that in the future people will come up with legitimate use cases for this sort of work.